It’s time I wrote another one of these posts. I have been experimenting a bit with cooperative pathfinding during the last few weeks and it is working pretty well now.

What is cooperative pathfinding

Cooperative pathfinding is when instead of an agent just planning a path to the target and hoping it doesn’t crash in to any other agents the pathfinding algorithm will actually look at where all other agents will be in the future and make sure that the planned path does not collide with them. This is easier said than done because agents might change paths or new agents might be created while following the original path. Also the first agent that searches for a path can obviously not know where the other agents are going to be (since their paths have not been planned yet) but what essentially happens is that the first agent will plan its path and then the other agents will try to avoid crossing that path so it works well in practice.

There are many good papers on this subject. For example “Cooperative Pathfinding” by David Silver.

Here is a video showing how my experiments have turned out.

Why not local avoidance

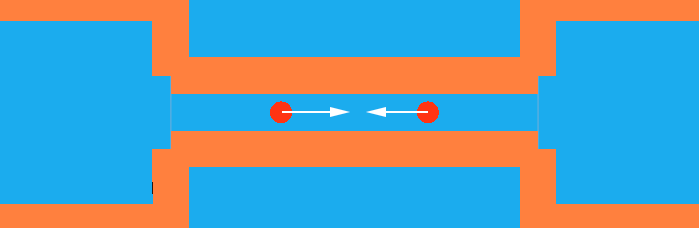

Local avoidance is another technique for avoiding collisions however there are some downsides to it. Local avoidance usually only considers obstacles just a few seconds into the future and the ones in close proximity. This leads to it sometimes becoming stuck in a local minima which it cannot get out of. For example if two agents meet in a very thin corridor it is unlikely to be able to resolve that situation which requires one of the agents to back out of the corridor to let the other one past. Local avoidance simply does not have that longer term planning. If cooperative pathfinding was used instead, one agent would not even enter the corridor but would wait at the end for the other agent to pass (see the video for an example).

How does it work

The way this type of cooperative pathfinding works is that instead of treating say every square in a grid graph as a node, it will treat every pair of a square and a time as a node. In other words instead of just searching space, it searches space-time. This way the pathfinding algorithm will not search just node at a position and check if that is valid, but it will search a node at a specific position and a specific time and check if that is valid (which it would be if it was not inside an obstacle and no other agents are in that node at that time). The calculated path is then a sequence of squares with specified times of when the agent should arrive at those nodes. Immediately after calculating the path the agent can then reserve all the nodes in that path so that no other agents will calculate paths that will lead to a collision.

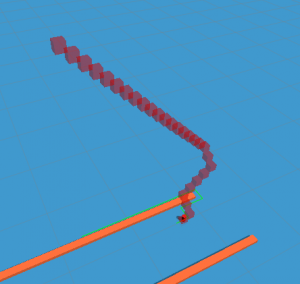

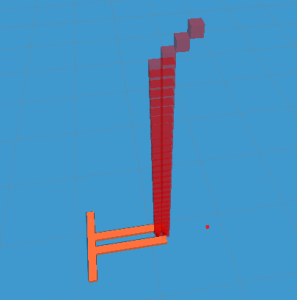

In the image to the right you can see an agent (red cylinder) following a path (green) and the reserved nodes in the path (red boxes). Reservations higher up indicate that they are reserved further into the future.

Now we start to see some downsides to cooperative pathfinding as well. All those nodes need to represented and searched, that means if we want to search say 20 ticks into the future (where a tick is the time it takes for the agent to move one square) we need to search 20 times as many nodes as we would have to if we had not used cooperative pathfinding. We will also use a lot more memory but it isn’t 20 times as much since we don’t need to store a copy of the node 20 times, after all the position of the node and a lot of other data is the same. In my experiments I have usually limited the number of ticks in the future it can search search to either 20 or 40 and that seems to cover most cases. I have also used aggressive heuristic optimizations to make sure that only the most relevant nodes are searched. Another limitation in the current implementation is that all agents need to move at the exact same speed. This can be worked around by instead of reserving one node at time T when moving forwards one can reserve both the node at T and T+1. This would be used for slow units that move at exactly half the speed of the rest. But as noted, I have not yet implemented this.

The system described above would work pretty well, but it would fail completely in some cases. Consider for example one agent that does not have a goal at all but just want to stand on the same spot and another agent that needs to move past this first agent. The first agent would have reserved all the nodes on that spot in the future so the second agent will just have to wait. It will resolve itself after a while because agents recalculate their paths and if the first agent had calculated its path say 3 ticks ago, there would be 3 nodes that were not reserved yet (remember that agents only reserve up to a fixed number of ticks in the future) that the second agent could use to move forward. This leads to very long wait times for the agents and overall it just feels sluggish even though it usually manages to resolve all collisions. An improvement to this is to add a very small penalty for waiting and making sure that the penalty is higher for nodes in the near future compared to nodes the agent will reach in a long time. So the agents will prefer to wait as far into the future as possible. In this example the next time the agent that just stands still recalculates its path it will notice that the other agent will move over the node it is standing on in the future, so it will decide to move out of the way quickly and then wait and then move back to its original position rather than waiting until right before the other agent will take its spot and move away then. Later when the agent with the goal searches for a path again it will find that the other agent has moved out of the way and it can proceed to move to directly to the goal. It takes a few round trips of path recalculations but in my tests this has reduced the waiting times a lot.

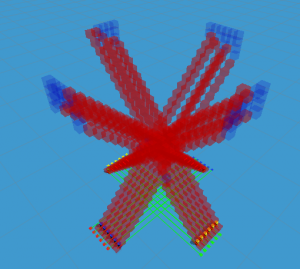

Another thing that can be done is to make it so that when an agent is standing still it doesn’t completely reserve the node it is standing on, instead it will just apply large penalty for other agents to move over it. This further reduces waiting times a lot, especially in crowded scenarios, however it makes it possible for agents to overlap in some cases. If that is acceptable in the game or not depends. In the video I have this enabled because otherwise it takes ages for the agents to find collision free paths when ordered to order themselves in a dense grid.

When will this be in the A* Pathfinding Project

I am not sure. There are a bunch of tweaks I need to do to get it stable. Also keep in mind that this type of cooperative pathfinding has a long list of limitations. So if you are thinking “that would be great for my game” then read this list first.

- All units need to move at the same speed.

- No physics can be used to push agents around.

- It is very grid based (well, it can be used with any graph, but it makes most sense on grid graphs).

- It does not take unit sizes into account. All units are assumed to occupy a single node.

- Only a single pathfinding thread can be used since paths need to be calculated sequentially (you can use more, but then you will have no guarantees that units will not collide).

- It does in no way guarantee an optimal solution or even that a solution can be found.

- No kinds of links can be used.

- I think that was everything, but I might have forgot some constraint.

Hey, I am very impressed with your experiments, we bought the pro version thinking you already have this implemented (we mixed this up with local avoidance), what are you thought on when this will be released? Thanks.

Hi

I don’t know unfortunately. I would like to implement it fully as soon as possible, but I am involved in too many other things, and there are a few other features for the A* Pathfinding Project that I am working on… so… I really don’t know 🙁

Hi

Yep. I just haven’t implemented multiple speeds, but I know it can be done. Units with larger sizes are more tricky though (well, NxN units where N is an odd number could work, but it comes with a performance cost and some slightly tricky code).

Yeah, optimal solution is likely NP-complete.

I have not looked at fringe search before, I will have a look at it. But reading on wikipedia it doesn’t seem to have that much information on it and it feels like there are many cases where it will lead to suboptimal performance (that’s just a hunch though, I haven’t tested it at all). HpA* is definitely a good technique. I have yet to implement it in this library because it is hard to keep everything flexible and working when you have multiple different graph types and path types. I use euclidean embedding to optimize the search however (see Game AI Pro (1 or 2, cannot remember) for reference).

I worked on that for a rts game and i have few remarks

You should be able to make units move at different speeds as far as they are multiple of the tick.

You could use different size of units as far as they reserve their slots in space-time map.

Optimal solution can be garantee only with “complete” pathfinding algo which are expensive in processing time therefore can’t be use in real time games.

If you use A* for your backsearch euristic, i suggest fringe search instead. It’s easier to resume and faster than regular A* on short path.

I also suggets to use HpA* for large scale map. (or any reduced graph technic: Pov…) It will give cooperative pathfinding way points that will lmit the search depth.