I have recently started to experiment with ways of achieving very high quality movement for characters.

Usually in video games or simulations, one requests a path from the pathfinding system and follows it by, for example, rotating the character around its pivot point and moving it towards a point slightly further ahead on the path. This produces smooth, but not very high quality, movement. For humanoid characters you really want it to look like the character is moving itself, not being dragged along a path while playing animations that only roughly correspond to how it is moving.

The Unity engine has an animation system called ‘Mecanim’ which has a feature called ‘root motion’. When root motion is enabled, the animations drive the movement of the character, which results in very high quality movement. Unfortunately, that movement is very hard to control: you would have to figure out exactly which animation to play, and for how long, to get the character to move to a specific point. In many cases it might be impossible to reach the position you want without a very complicated and unnatural sequence of animations.
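As a rough illustration of the conventional path-following approach mentioned above, here is a minimal Python sketch. It is not taken from any particular engine. The function names, the 2D polyline representation, and the use of distance travelled as a stand-in for progress along the path are all assumptions made for the example.

```python
import math

def point_at_distance(path, distance):
    """Return the point `distance` units along a polyline of (x, y) points."""
    for (ax, ay), (bx, by) in zip(path, path[1:]):
        seg = math.hypot(bx - ax, by - ay)
        if seg > 0 and distance <= seg:
            t = distance / seg
            return (ax + (bx - ax) * t, ay + (by - ay) * t)
        distance -= seg
    return path[-1]  # past the end: clamp to the last point

def follow_step(position, heading, travelled, path,
                speed, turn_rate, lookahead, dt):
    """Advance the character one time step towards a look-ahead point.

    Assumption: `travelled` (distance moved so far) approximates how far
    along the path the character is. Returns (position, heading, travelled).
    """
    tx, ty = point_at_distance(path, travelled + lookahead)
    desired = math.atan2(ty - position[1], tx - position[0])
    # Shortest signed angle from the current heading to the desired one.
    diff = (desired - heading + math.pi) % (2 * math.pi) - math.pi
    max_turn = turn_rate * dt
    heading += max(-max_turn, min(max_turn, diff))
    step = speed * dt
    position = (position[0] + math.cos(heading) * step,
                position[1] + math.sin(heading) * step)
    return position, heading, travelled + step
```

Nothing in this loop knows about feet or animation clips, which is why the result tends to look like the character is sliding along the path.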

There should be a middle ground between easy-to-control movement and high quality movement that gives us the best of both approaches. I have been inspired by a paper called “Planning Biped Locomotion using Motion Capture Data and Probabilistic Roadmaps”. Instead of planning a polygonal or spline path for the character to follow, it plans a sequence of footsteps that the character should take. I think this is a very promising approach, with several potential improvements as well.

The main parts of the algorithm described in the paper can be summarized as follows (for more details, see the paper). For a visualization of some of the steps, see the video below.

- Plan a sequence of footprints and animation clip pairs using some kind of planner (in the paper they construct a graph of possible footprints with edges between them representing animation clips).

- Smooth the footprints while ensuring that the animations are not distorted too much and that they do not cause the character to intersect obstacles.

- Adjust the footprints to make them more similar to the original animation clips

- Optionally go to step 2 for another smoothing iteration (the paper uses between 2 and 4 iterations).

- Pick new animations after the footprints have been smoothed (or possibly after every smoothing iteration).

- Trace the root path (pivot of the character) of the animations if they would be played as is. This corresponds to using Mecanim’s root motion feature. The resulting path will unfortunately not match the desired path perfectly.

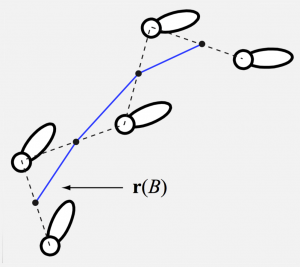

- Use the midpoints between the steps as a reference for the root path (see image).

- Transform the root path from step 6 to match the reference points of the desired path. This will give us a path that the character could follow.

- Use a retargeting step to optimize the resulting animation to make it more aesthetically pleasing and ensure that constraints such as the character’s feet actually being placed at the desired footprints are fulfilled.
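To make the idea of using midpoints as reference points concrete, here is a minimal Python sketch. It assumes footprints are plain 2D `(x, y)` positions and that "midpoint between the steps" means the midpoint between each pair of consecutive footprints. The function name `step_midpoints` is made up for the example.

```python
def step_midpoints(footprints):
    """Return the midpoint between each pair of consecutive footprints.

    footprints: list of (x, y) positions in the order they are stepped on.
    The result has one entry fewer than the input.
    """
    return [((ax + bx) / 2, (ay + by) / 2)
            for (ax, ay), (bx, by) in zip(footprints, footprints[1:])]

# Alternating left/right footprints along a straight line give midpoints
# that run down the middle, which is roughly where the root should travel.
reference = step_midpoints([(0.0, -0.5), (1.0, 0.5), (2.0, -0.5)])
```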

Currently I have implemented steps 2, 4, 5, 6, 7 and 8. In place of step 9, a comparatively simple IK solver is used (see video) to place the feet where they should be. In place of step 1, the sequence of footprints is determined manually and the animation clips are selected greedily (picking the best animation clip for one pair of footprints at a time). The greedy selection algorithm often produces a sequence of animations that is very far from the desired path; however, it is good enough to be used as input to the later stages. In the future it will be replaced completely.
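Greedy selection of this kind can be sketched roughly as follows. This is not the prototype's code. It assumes each footprint is an `(x, y, angle)` tuple and that each clip is summarized by the 2D offset it moves the next foot, expressed in the local frame of the previous footprint. The clip names and the squared-distance score are hypothetical.

```python
import math

def local_offset(a, b):
    """Offset from footprint a to footprint b, expressed in a's local frame.

    Footprints are (x, y, angle) tuples with the angle in radians.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(-a[2]), math.sin(-a[2])
    return (dx * c - dy * s, dx * s + dy * c)

def pick_clips_greedy(footprints, clips):
    """For each consecutive pair of footprints, pick the clip whose offset
    is closest to the desired one, looking at one pair at a time.

    clips: dict mapping a (hypothetical) clip name to its (x, y) offset.
    """
    chosen = []
    for a, b in zip(footprints, footprints[1:]):
        wx, wy = local_offset(a, b)
        best = min(clips, key=lambda name: (clips[name][0] - wx) ** 2
                                           + (clips[name][1] - wy) ** 2)
        chosen.append(best)
    return chosen
```

Because each choice ignores the error left over from earlier choices, the chained clips can drift far from the footprints, which matches the behaviour described above.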

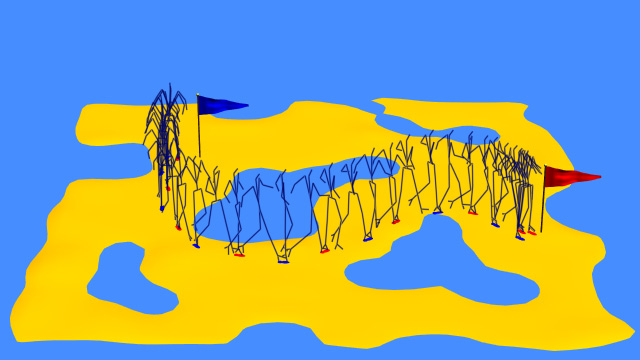

Below you can watch a video of a prototype of my current implementation.

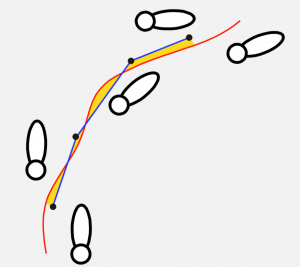

I am not very happy with the movement quality so far. Initially I had thought that the retargeting step that the paper uses (based on another paper called “A Hierarchical Approach to Interactive Motion Editing for Human-like Figures”) might be unnecessary and could be skipped if the transformation step was just improved a bit. However, it turns out that this is not enough. I did make improvements to the transformation step: the paper only uses a series of rigid transformations (think of a metal chain that can be deformed, but whose individual links cannot bend), while my implementation incorporates blending weights and works in a very similar way to how skinned meshes are deformed in game engines and animation software. This results in a smoother path.
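The skinning analogy can be illustrated with a small Python sketch of linear blending between neighbouring rigid transforms. This is an assumption about what such blending might look like, not the actual implementation. Each transform is a hypothetical `(angle, tx, ty)` tuple attached to one reference point, and each root path sample carries a parameter `u` that says which two reference points it lies between.

```python
import math

def apply_transform(transform, p):
    """Apply a 2D rigid transform (angle in radians, tx, ty) to point p."""
    angle, tx, ty = transform
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def deform_blended(samples, transforms):
    """Deform root path samples by blending neighbouring rigid transforms.

    samples: list of (u, (x, y)), with u in [0, len(transforms) - 1];
             integer values of u correspond to the reference points.
    Each sample is moved by both neighbouring transforms and the results
    are mixed with weights (1 - w, w), as in linear blend skinning.
    """
    out = []
    for u, p in samples:
        i = max(0, min(int(u), len(transforms) - 2))
        j = min(i + 1, len(transforms) - 1)
        w = min(max(u - i, 0.0), 1.0)
        ax, ay = apply_transform(transforms[i], p)
        bx, by = apply_transform(transforms[j], p)
        out.append((ax * (1 - w) + bx * w, ay * (1 - w) + by * w))
    return out
```

With purely rigid links, every sample on a link would use that link's transform alone. Here the influence shifts gradually from one transform to the next, so the path does not kink where two links meet.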

Some of you reading this may wonder if/when this will show up in the A* Pathfinding Project. Currently I have no plans for this, though it may of course happen if this experiment turns out well. Right now this is just research.

The next step would be to either try to get a path planner working (step 1) or implement the retargeting step (step 9). It turns out that in the retargeting algorithm there are a lot of complicated things going on and it seems I finally have to learn conjugate gradient descent, but hopefully that should be doable.

When I saw the video, I immediately thought that the foot placement could look better with a small offset and compressed on the inside of arcs, like this: https://i.imagebanana.com/img/y0yic5bk/walk.jpg. But maybe I just missed the point and this is part of what you’re trying to achieve.

Thank you Aron!!! Keep doing that great work.